I originally planned to participate in this year's Mardini challenge, but my plans changed, and I ended up being busy with other things for most of the month. Although I looked up the daily challenges multiple times and even came up with ideas, I never started a single project.

However, the idea for day 9 stuck with me. I envisioned two light bulbs smashing into each other and breaking into several glass shards, with each shard displaying a completely intact light bulb on its surface.

Although I had a clear picture in my head, I wasn't sure how to execute it. I remembered how game engines create mirror or portal effects by rendering additional cameras into textures that are then displayed on a surface. However, I'm not aware of a similar technique for path-traced rendering. If you know of one, please let me know.

Instead, I decided to create the effect in compositing. Even though Mardini was over, I forced myself to use Houdini's compositing tools for this. I only have a little experience with compositing in general, and I had never used COPs before.

Simulating glass

I fractured the glass using RBD Material Fracture to get nice edge details, and then simulated the crash with the SOP-level Bullet solver. Both bulbs share the same fracture pattern but are rotated differently in the simulation. For the tiny glass splinters, I set up a secondary particle simulation.

Rendering the main simulation

I split the rendering into multiple layers. The first one consisted only of the glass shards and an HDRI from Poly Haven.

This render also came with three important AOVs: a reflection pass and two different cryptomattes. The first cryptomatte has an ID for every piece of shattered glass, while the second one has an ID for the inside, outside and border of all pieces.
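For anyone who hasn't worked with cryptomattes: per the Cryptomatte specification, each pixel stores a few ranked (ID, coverage) pairs, with IDs in the red and blue channels and coverage in green and alpha. A matte for one shard is then just the summed coverage of every rank whose ID matches. Here's a minimal plain-Python sketch of that idea, assuming the pixel layers are already read into lists of RGBA tuples. `unpack_rgba_layers` and `matte_for_id` are hypothetical helpers, not the implementation of any COP node.

```python
# Hypothetical helpers illustrating Cryptomatte matte extraction; assumes the
# cryptomatte layers of each pixel are already available as RGBA tuples.
from typing import List, Sequence, Tuple

# One rank of a cryptomatte pixel: (ID, coverage).
Rank = Tuple[float, float]


def unpack_rgba_layers(layers: Sequence[Tuple[float, float, float, float]]) -> List[Rank]:
    """Split a pixel's cryptomatte RGBA layers into ranked (ID, coverage) pairs."""
    ranks = []
    for r, g, b, a in layers:
        ranks.append((r, g))  # even rank: ID in R, coverage in G
        ranks.append((b, a))  # odd rank: ID in B, coverage in A
    return ranks


def matte_for_id(pixels: Sequence[Sequence[Rank]], target_id: float) -> List[float]:
    """Per-pixel coverage of target_id, 0.0 where the ID is absent."""
    matte = []
    for ranks in pixels:
        # IDs are hashes stored bit for bit, so an exact comparison is intended.
        coverage = sum((cov for pid, cov in ranks if pid == target_id), 0.0)
        matte.append(min(coverage, 1.0))
    return matte
```

Multiplying a bubble render by the matte of its shard is what restricts that bubble to the shard's surface.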

I really wanted to render everything with Karma XPU, since my rig has a beefy GPU. But as of Houdini 19.5, Karma XPU doesn't support cryptomattes. I could've rendered the cryptomattes in CPU mode and the rest in XPU mode, but I struggled a lot with that. Maybe it's just me, but I wasn't able to get matching motion blur from Karma XPU and Karma CPU.

Thankfully, I still had a Redshift license lying around, and to my surprise, Redshift can now be used as a USD render delegate. GPU rendering, Cryptomatte, USD delegate: win, win, win. I was very happy not to fall back to OBJ-based rendering, since everything was already set up neatly in USD.

Wire simulation

The glowing wires inside the bulbs are simulated as Vellum hair. Nothing really special here: high stiffness combined with super low damping gives the wobbly effect. I extracted the transformation from the glass simulation and used it as the main motion for the Vellum simulation.
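To show why that stiffness/damping combination reads as "wobbly", here's a single damped spring integrated with semi-implicit Euler. This is a toy model, not Vellum's solver, and the stiffness and damping values are illustrative assumptions. With low damping the spring keeps oscillating long after release; with higher damping it settles almost immediately.

```python
# Toy damped spring (NOT Vellum's solver); stiffness/damping values are assumptions.
from typing import List


def simulate_spring(stiffness: float, damping: float, steps: int = 240,
                    dt: float = 1.0 / 240.0, x0: float = 1.0) -> List[float]:
    """Displacement of a unit mass released at x0, one sample per step."""
    x, v = x0, 0.0
    history = []
    for _ in range(steps):
        force = -stiffness * x - damping * v  # spring pull plus velocity damping
        v += force * dt                       # unit mass: acceleration == force
        x += v * dt                           # semi-implicit: uses the updated v
        history.append(x)
    return history


def late_amplitude(history: List[float], tail: int = 48) -> float:
    """Peak absolute displacement over the last `tail` samples."""
    return max(abs(x) for x in history[-tail:])
```

For example, `late_amplitude(simulate_spring(2000.0, 0.5))` stays large after one simulated second, while `late_amplitude(simulate_spring(2000.0, 40.0))` is practically zero.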

The ClothDeform SOP made it easy to deform the actual wire with the simulated hair.

Lots of bubbles

Now for the weird, hacky part. I used the bulb's inverse transformation to bring the wires back to the world origin while keeping their wobble.
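The inverse-transform trick boils down to this: applying the inverse of the bulb's per-frame transform removes the bulb's flight path, but anything moving relative to the bulb (the wire wobble) survives. A minimal sketch, assuming the transform is a rigid rotation about Z plus a translation; the function names are hypothetical, and in Houdini you'd work with full 4x4 matrices instead.

```python
# Hypothetical helpers; assumes a rigid transform = rotation about Z + translation.
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def rotate_z(p: Vec3, angle: float) -> Vec3:
    """Rotate a point about the Z axis by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    return (c * p[0] - s * p[1], s * p[0] + c * p[1], p[2])


def to_world(p_local: Vec3, angle: float, translate: Vec3) -> Vec3:
    """Forward transform: rotate, then translate."""
    r = rotate_z(p_local, angle)
    return (r[0] + translate[0], r[1] + translate[1], r[2] + translate[2])


def to_local(p_world: Vec3, angle: float, translate: Vec3) -> Vec3:
    """Inverse transform: undo the translation first, then undo the rotation."""
    shifted = (p_world[0] - translate[0], p_world[1] - translate[1], p_world[2] - translate[2])
    return rotate_z(shifted, -angle)
```

Running every simulated wire point through `to_local` with that frame's bulb transform leaves only the wobble, centered at the origin.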

With a Measure SOP, I isolated the 30 biggest pieces of the glass simulation and applied their transformations to 30 copies of an intact bulb, together with the previously simulated wire.
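The selection step itself is simple once every piece has a measured size: sort and keep the top N. A minimal sketch, assuming each piece is reduced to a name and a single size value. The post doesn't say whether area or volume was measured, and `largest_pieces` is a hypothetical name.

```python
# Hypothetical helper; assumes one measured size (area or volume) per piece name.
import heapq
from typing import Dict, List


def largest_pieces(sizes: Dict[str, float], count: int = 30) -> List[str]:
    """Names of the `count` pieces with the largest measured size, biggest first."""
    return [name for name, _ in heapq.nlargest(count, sizes.items(), key=lambda kv: kv[1])]
```

For example, `largest_pieces({"piece0": 0.5, "piece1": 2.0, "piece2": 1.0}, 2)` keeps `piece1` and `piece2`.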

This gave me lots of overlapping bubbles. For rendering, however, I needed each bulb separately, so I used PDG to render out one bubble after another.

I named the rendered files to match the IDs of the cryptomatte from the first render.
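In the actual setup PDG drives the renders with TOP nodes; this plain-Python sketch only mirrors the bookkeeping. It creates one job per (piece ID, bulb ID) pair with an output folder named `piece<pieceId>_<bulbId>`, the pattern the compositing script parses, and shows that the IDs can be recovered from the folder name. `BubbleJob`, `make_jobs` and `parse_folder` are hypothetical, and the render root is a placeholder.

```python
# Hypothetical bookkeeping for per-bubble renders; names and paths are placeholders.
import os
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class BubbleJob:
    piece_id: int
    bulb_id: int
    output_dir: str


def make_jobs(root: str, pieces: Iterable[Tuple[int, int]]) -> List[BubbleJob]:
    """One render job per (piece_id, bulb_id), with a folder name encoding both IDs."""
    return [BubbleJob(p, b, os.path.join(root, f"piece{p}_{b}")) for p, b in pieces]


def parse_folder(name: str) -> Tuple[int, int]:
    """Recover (piece_id, bulb_id) from a folder name like 'piece12_1'."""
    prefix = "piece"
    if not name.startswith(prefix):
        raise ValueError(f"not a bubble folder: {name!r}")
    piece, bulb = name[len(prefix):].split("_", 1)
    return int(piece), int(bulb)
```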

Combining the bubbles

I ended up with renders of 30 glowing bubbles over 72 frames, and each one needed to be masked with its corresponding cryptomatte ID. That's tedious and boring if done manually, but fast and easy with an Image Network and a little Python.

I simply looped over the render folder's structure, created a File COP for every bubble and masked it with the matching cryptomatte ID. That's why I made sure the names of the rendered files matched the cryptomatte IDs.

```python
import hou  # available inside Houdini's Python session
from nodesearch import parser
from os import listdir
from os.path import isdir, join


def COPCreateBulbs(kwargs):
    """Rebuild one masked COP branch per rendered bulb inside this subnet."""
    subnet = kwargs["node"]
    base = subnet.parm("base_path").eval()
    version = subnet.parm("version").eval()

    ### Cleanup: remove the branches created by a previous run
    matcher = parser.parse_query("shard*")
    # Collect the matches first so nodes aren't destroyed mid-iteration.
    for node in list(matcher.nodes(subnet, recursive=False)):
        node.destroy()

    ### Create: one node per render folder named "piece<pieceId>_<bulbId>"
    for folder in sorted(listdir(base)):
        if not folder.startswith("piece") or not isdir(join(base, folder)):
            continue

        pieceId, sep, bulbId = folder.removeprefix("piece").partition("_")
        if not sep:
            continue  # skip folders that don't follow the naming scheme

        # Custom digital asset (not a built-in COP) that loads and masks one bubble.
        node = subnet.createNode("rowdy_extract_piece", "shard{0}_{1}".format(pieceId, bulbId))
        node.parm("bulbId").set(bulbId)
        node.parm("pieceId").set(pieceId)
        node.parm("version").set(version)
        node.setInput(0, subnet.indirectInputs()[0])

        # Feed every shard of the same bulb into that bulb's merge node.
        merge = subnet.node("merge_bulb_{0}".format(bulbId))
        if merge:
            merge.setNextInput(node)

        node.moveToGoodPosition(relative_to_inputs=True, move_inputs=True,
                                move_outputs=True, move_unconnected=True)
```

A more efficient way would've been to write out a Cryptomatte manifest JSON and use it in the Python script, but I have no idea how to get one out of Redshift. Anyway, this code produced a lot of COP nodes.
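For reference, here's roughly what the manifest route involves. The Cryptomatte specification derives each ID by hashing the object name with MurmurHash3 (32-bit, seed 0) and reinterpreting the hash bits as a float32, flipping one exponent bit so the result is never zero, denormal, infinite or NaN. The manifest is a JSON object mapping names to those IDs as 8-digit hex strings. This is a plain-Python sketch, not a Redshift or Houdini API: all function names are hypothetical, and which exact name string Redshift hashes (for example a full USD prim path) is an assumption you'd have to check against its output.

```python
# Hypothetical helpers following the Cryptomatte spec's naming -> ID scheme.
import json
import struct


def murmur3_32(data: bytes, seed: int = 0) -> int:
    """MurmurHash3 (x86, 32-bit) as an unsigned int."""
    c1, c2, mask = 0xCC9E2D51, 0x1B873593, 0xFFFFFFFF

    def rotl(x: int, r: int) -> int:
        return ((x << r) | (x >> (32 - r))) & mask

    def mix_k(k: int) -> int:
        k = rotl((k * c1) & mask, 15)
        return (k * c2) & mask

    h = seed & mask
    body_len = len(data) - len(data) % 4
    for i in range(0, body_len, 4):
        h ^= mix_k(int.from_bytes(data[i:i + 4], "little"))
        h = rotl(h, 13)
        h = (h * 5 + 0xE6546B64) & mask

    tail = data[body_len:]
    if tail:
        # The 1-3 leftover bytes are read little-endian, as in the reference code.
        h ^= mix_k(int.from_bytes(tail, "little"))

    # Finalization mix (fmix32).
    h ^= len(data) & mask
    h ^= h >> 16
    h = (h * 0x85EBCA6B) & mask
    h ^= h >> 13
    h = (h * 0xC2B2AE35) & mask
    h ^= h >> 16
    return h


def _bits_to_float(bits: int) -> float:
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def name_to_id(name: str) -> float:
    """Cryptomatte-style float ID: hash bits reinterpreted as a float32."""
    h = murmur3_32(name.encode("utf-8"))
    exponent = (h >> 23) & 0xFF
    if exponent in (0, 255):
        h ^= 1 << 23  # avoid zero/denormal, inf and NaN
    return _bits_to_float(h)


def id_to_hex(value: float) -> str:
    """8-digit hex form of a float32 ID, as stored in a manifest."""
    return format(struct.unpack("<I", struct.pack("<f", value))[0], "08x")


def load_manifest(text: str) -> dict:
    """Map names to float IDs from a JSON manifest of name -> hex ID."""
    return {name: _bits_to_float(int(hex_id, 16)) for name, hex_id in json.loads(text).items()}
```

With a manifest loaded this way, a script could look up each folder's ID directly instead of relying on matching names.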

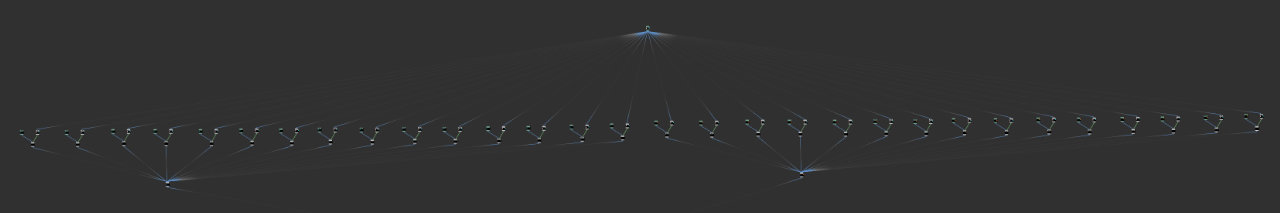

COPs isn't the fastest part of Houdini, and generating dozens of cryptomatte nodes made it painfully slow. Saving the merged layers to a proxy file fixed the performance and also saved me from ever looking at this monster of a network again.

Reflections, flickering and glow

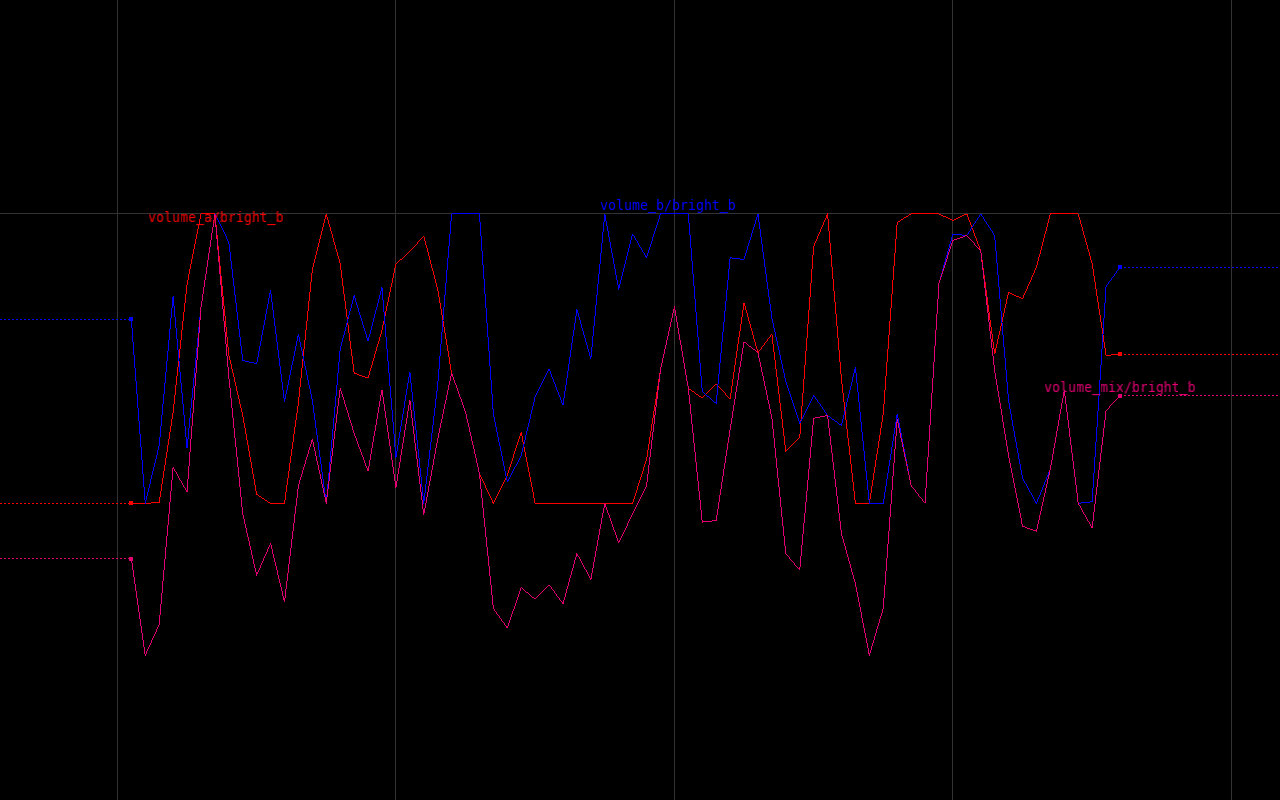

Initially I planned to render the flickering lights along with the bulbs, but instead I rendered them at a constant intensity and controlled the flickering in post. Using CHOPs inside COPs, I got really nice flicker curves to drive the Brightness COP.
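For readers who'd rather not open CHOPs, here's a rough stand-in for such a flicker curve: smooth random noise (value noise with cosine interpolation between random keys) remapped into a brightness range, one value per frame. This is an assumption about the kind of curve, not the actual CHOP network; `flicker_curve` and all its parameter values are hypothetical.

```python
# Hypothetical flicker generator; key spacing, range and seed are illustrative.
import math
import random
from typing import List


def flicker_curve(frames: int = 72, keys_every: int = 6, low: float = 0.35,
                  high: float = 1.0, seed: int = 9) -> List[float]:
    """One brightness multiplier per frame, within [low, high]."""
    rng = random.Random(seed)
    # Random key values every `keys_every` frames, plus spares at the end.
    keys = [rng.random() for _ in range(frames // keys_every + 2)]
    curve = []
    for frame in range(frames):
        i, frac = divmod(frame / keys_every, 1.0)
        i = int(i)
        t = (1.0 - math.cos(frac * math.pi)) * 0.5  # cosine ease between keys
        value = keys[i] * (1.0 - t) + keys[i + 1] * t
        curve.append(low + (high - low) * value)
    return curve
```

Each value would then be the brightness multiplier for that frame; reusing the same seed yields the same curve, which is what keeps separate layers in sync.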

For the glow, I bumped the brightness of the wires, created three levels of blur and used the same CHOP network to make the glow flicker in sync with the wires.
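The glow structure can be sketched in 1D: add three blurred copies of the bright wires back onto the source, then scale everything by the current flicker value. The radii and weights are illustrative assumptions, and a simple box blur stands in for whatever blur the COPs used; `box_blur` and `glow` are hypothetical names.

```python
# Hypothetical 1D glow; radii, weights and the box blur are stand-ins.
from typing import List, Sequence


def box_blur(row: Sequence[float], radius: int) -> List[float]:
    """Average each pixel with neighbours within `radius`; the window shrinks at the borders."""
    n = len(row)
    out = []
    for i in range(n):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        out.append(sum(row[lo:hi]) / (hi - lo))
    return out


def glow(row: Sequence[float], flicker: float,
         radii: Sequence[int] = (2, 8, 24),
         weights: Sequence[float] = (0.6, 0.3, 0.15)) -> List[float]:
    """Source plus three weighted blur levels, all scaled by the flicker value."""
    result = list(row)
    for radius, weight in zip(radii, weights):
        blurred = box_blur(row, radius)
        result = [r + weight * b for r, b in zip(result, blurred)]
    return [v * flicker for v in result]
```

Because the flicker multiplies the sum, the wires and all three glow levels brighten and dim together.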

Volumes

Both volumes, the fog in the background and the dust from the breaking glass shards, were created with the SOP Pyro Solver.

The surfaces of the glass shards act as mesh lights in the volume render.

Final notes

I'm glad I was able to fit in a small project like this. It provided a nice break from all the simulation work I do at my job and gave me an opportunity to properly render something instead of relying on viewport OpenGL renders all the time. I had always kept my distance from CHOPs, but this time I managed to scratch the surface a little, and it's actually less scary than I imagined. Overall, it was great to work on something small instead of spending weeks or even months on the same project. I should definitely do more of these small projects in the future.